# Start with the right question

Customer satisfaction survey questions are small levers that move big systems. Done well, they lift response rates, uncover real friction, and guide product decisions. In one study on mobile surveys, effective wording and flow tripled responses compared to sloppy forms, and the difference showed up in the quality of insights, not just volume. See the breakdown in Userpilot’s overview of mobile surveys: https://userpilot.com/blog/mobile-surveys/.

# Why surveys still matter

Satisfaction surveys are a simple contract. You ask, customers respond, you act. When that loop is tight, trust grows. When it breaks, people stop answering. The fundamentals are well covered by Qualtrics’ customer satisfaction guide: https://www.qualtrics.com/experience-management/customer/satisfaction-surveys/.

What to measure depends on the job you need the data to do:

- CSAT (Customer Satisfaction Score) for immediate satisfaction with a single touchpoint.

- NPS (Net Promoter Score) for loyalty and advocacy.

- CES (Customer Effort Score) for effort: how easy it was to achieve a goal.

Each metric is a lens, not the whole picture. We usually combine them sparingly, then validate with qualitative notes.
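The three metrics are usually scored with simple conventions. The sketch below assumes CSAT is reported as the share of top-two-box answers on a 5-point scale, NPS as the percentage of promoters (9–10) minus the percentage of detractors (0–6) on the standard 0–10 recommend question, and CES as a plain average. Teams vary on these choices, so treat them as defaults to confirm rather than a standard this article prescribes.

```python
"""Common scoring conventions for CSAT, NPS, and CES (assumed, not prescribed)."""


def _require(scores: list[int]) -> None:
    # Guard against dividing by zero when a survey has no responses yet.
    if not scores:
        raise ValueError("no responses to score")


def csat(scores: list[int], scale_max: int = 5, top_box: int = 2) -> float:
    """Percent of answers in the top `top_box` points of a 1..scale_max scale."""
    _require(scores)
    threshold = scale_max - top_box + 1  # 4 on a 5-point scale, 6 on a 7-point one
    satisfied = sum(1 for s in scores if s >= threshold)
    return 100 * satisfied / len(scores)


def nps(scores: list[int]) -> float:
    """Percent promoters (9-10) minus percent detractors (0-6), on a 0-10 scale."""
    _require(scores)
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)


def ces(scores: list[int]) -> float:
    """Mean effort score; with 1 = very hard and 5 = very easy, higher is better."""
    _require(scores)
    return sum(scores) / len(scores)


print(csat([5, 4, 3, 5, 2]))  # 60.0: three of five answers are 4 or 5
print(nps([10, 9, 8, 6, 3]))  # 0.0: two promoters cancel two detractors
print(ces([4, 5, 3, 4]))      # 4.0
```

Passing `scale_max=7` moves the top-two-box threshold to 6, which matches the 7-point CSAT wording used later in this article.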

# Pick the right question types

Different questions answer different problems. Match the type to your decision.

# Rating scale questions

Use 5 or 7 points. Five points are quicker to answer. Seven add nuance without causing fatigue.

- Example: “How satisfied are you with your experience today?” 1 very dissatisfied, 7 very satisfied.

- Use when you need trend lines and benchmarks.

# Binary questions

Fast and clear, but blunt.

- Example: “Did you find what you needed today?” Yes or No.

- Use as a gate, then branch to deeper questions.

# Multiple choice

Good for features or reasons, not feelings.

- Example: “What was the main reason for contacting support?” Options plus Other.

- Keep options mutually exclusive and complete.

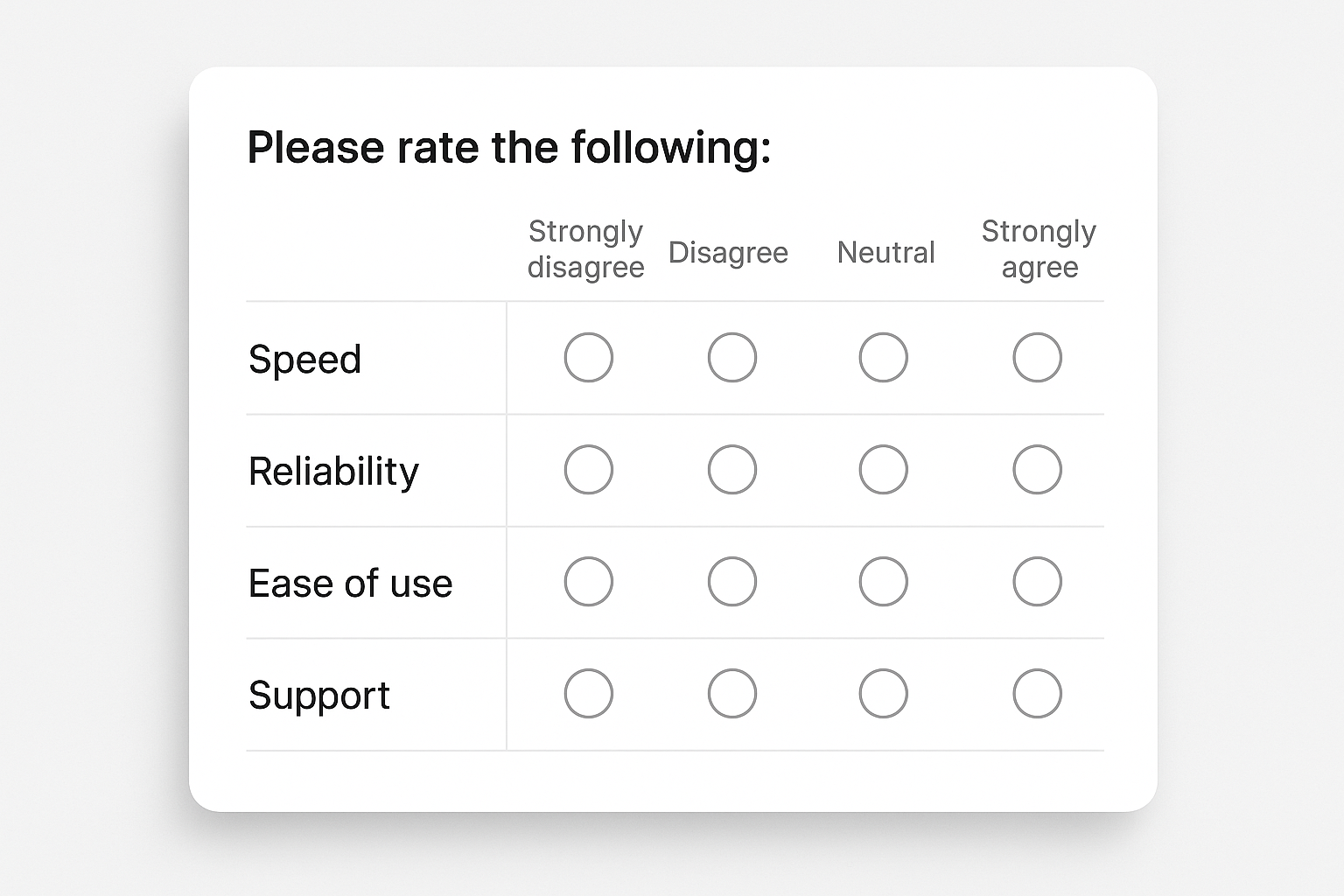

# Likert scale questions

Great for attitudes toward statements.

- Example: “The product is easy to learn.” Strongly disagree to Strongly agree.

- Avoid packing two ideas into one statement.

# Open ended

Where the story lives.

- Example: “What nearly made you stop using our product?”

- Place after structured items, then ask for one concise example.

# Wording that earns honest answers

Small edits change outcomes. The goal is neutrality and clarity.

- Remove leading language: not “our excellent support,” just “our support.”

- One idea per question. Avoid double-barreled items like “quality and service.”

- Use everyday words, skip internal jargon.

- Short sentences. Short labels. Precise scales.

- Balance options: equal numbers of positive and negative choices, with a clear neutral midpoint.

Principle: if a reasonable person could read a question two ways, rewrite it.
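These rules are judgment calls, but two of them can be partly screened by machine. This sketch flags words from a small, hypothetical list of praise terms (possible leading language), any use of “and” (a possible double-barreled item), and questions longer than an arbitrary 20-word cutoff. It is a crude keyword heuristic with false positives, so use it to prompt a human rewrite, not to approve questions.

```python
import re

# Hypothetical list of praise words that tend to lead respondents; extend for your team.
LEADING_WORDS = {"excellent", "amazing", "great", "outstanding", "wonderful"}
MAX_WORDS = 20  # arbitrary cutoff standing in for "short sentences"; tune to taste


def lint_question(text: str) -> list[str]:
    """Return warnings for one survey question using simple keyword checks."""
    words = re.findall(r"[a-z']+", text.lower())
    warnings = []
    leading = sorted(LEADING_WORDS.intersection(words))
    if leading:
        warnings.append("possible leading language: " + ", ".join(leading))
    if "and" in words:
        # "and" often joins two ideas, as in "quality and service".
        warnings.append("contains 'and': check for a double-barreled question")
    if len(words) > MAX_WORDS:
        warnings.append(f"{len(words)} words: consider shortening")
    return warnings


for q in [
    "How satisfied are you with our excellent support?",
    "How would you rate our quality and service?",
    "How satisfied are you with our support?",
]:
    print(q, lint_question(q))
```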

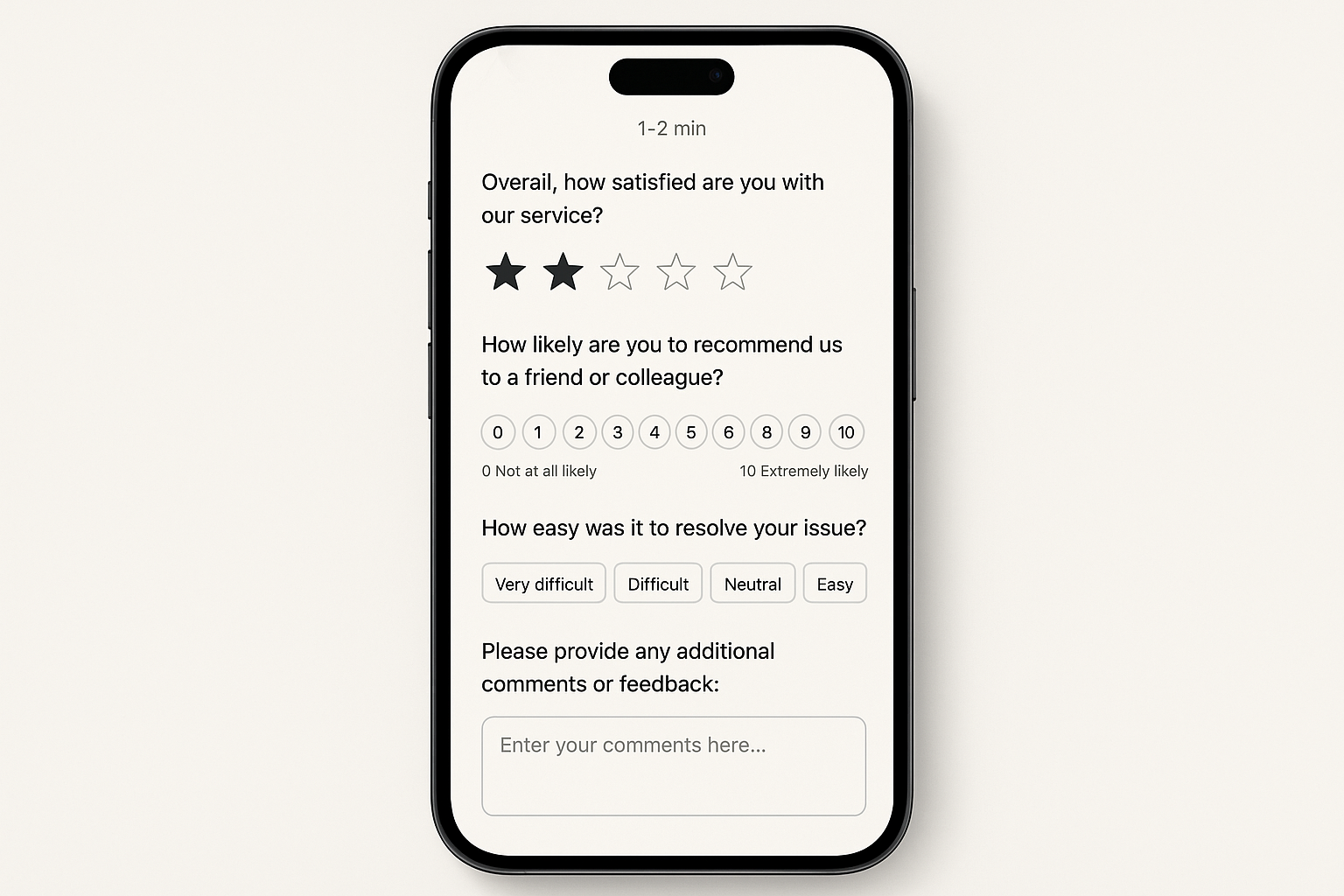

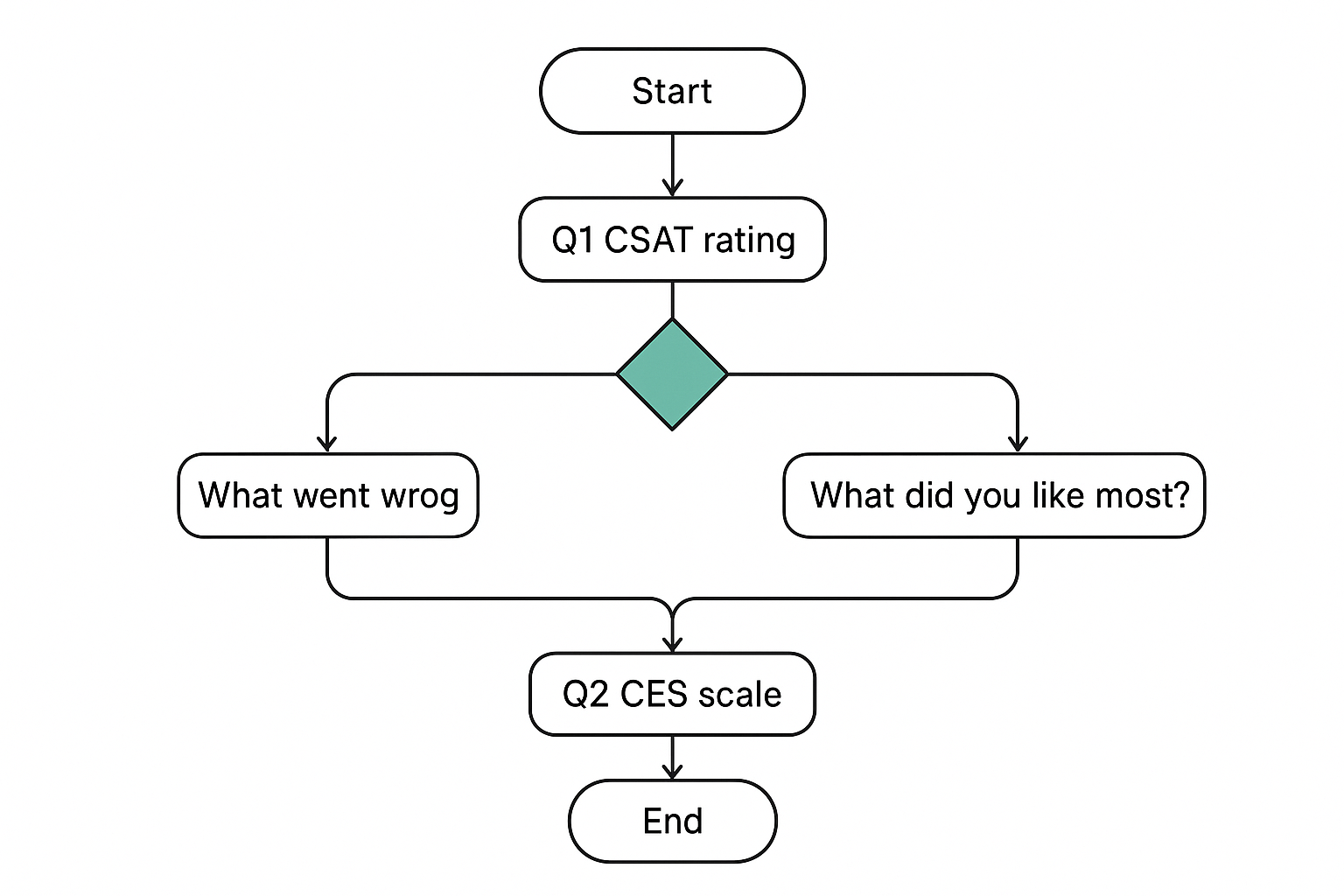

# Structure, logic, and flow

Order shapes answers. Start broad, then narrow. Use skip logic to keep people in relevant territory.

- Open with overall satisfaction or goal completion.

- Branch on the score. After a low score, ask what went wrong. After a high score, ask for highlights.

- End with one open question.

Micro-template:

- “Overall, how satisfied are you with [recent experience]?”

- If 1–3, “What went wrong?” If 6–7, “What worked well?”

- “How easy was it to do X today?”

- “Anything else we should know?”

Takeaway: relevance reduces drop-off and improves candor.
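The micro-template’s branching fits in a small function. This sketch assumes the 1–7 satisfaction scale from the template and, because the template leaves 4–5 unspecified, assumes those middle scores go straight to the effort question with no follow-up. `experience` and `task` are hypothetical parameters standing in for the bracketed placeholders.

```python
from typing import List, Optional


def follow_up(satisfaction: int) -> Optional[str]:
    """Pick the branch question for a 1-7 overall satisfaction score."""
    if not 1 <= satisfaction <= 7:
        raise ValueError("satisfaction must be between 1 and 7")
    if satisfaction <= 3:
        return "What went wrong?"
    if satisfaction >= 6:
        return "What worked well?"
    return None  # assumed: middle scores (4-5) get no follow-up


def question_path(satisfaction: int, experience: str, task: str) -> List[str]:
    """Return the questions a respondent with this score would see, in order."""
    path = [f"Overall, how satisfied are you with {experience}?"]
    branch = follow_up(satisfaction)
    if branch is not None:
        path.append(branch)
    path.append(f"How easy was it to {task} today?")
    path.append("Anything else we should know?")
    return path


for score in (2, 5, 7):
    print(score, question_path(score, "your recent order", "check out"))
```

Keeping the branch rules in one function also makes the 4–5 gap visible, so you can decide deliberately whether middle scores deserve their own follow-up.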

# Mobile-first or bust

Most surveys arrive on a phone. Design for thumbs and short attention.

- Target 2 minutes or less from start to submit.

- Big touch targets, one screen per idea, progress indicator.

- Keep text fields optional, but invite specifics.

- Cut half of what you think you need, then cut again.

For practical tactics that lift completion, see Formbricks’ guide on response rates: https://formbricks.com/blog/increase-survey-response-rate. Their advice on introductions, reminders, and intrinsic incentives is worth reading.

# Examples by scenario

Use these as starting points. Edit to your voice, product, and journey.

# Post-support interaction

- CSAT: “How satisfied are you with the help you received today?” 1–7.

- CES: “How easy was it to resolve your issue?” 1 very hard, 5 very easy.

- Open: “What could we have done better?”

# In-product moment for SaaS

- Feature fit: “How valuable is [Feature X] for your workflow?” 1–7.

- Reliability: “In the past 7 days, did anything break or feel slow?” Yes or No, then details.

- Direction: “Which one improvement would save you the most time?” Multiple choice plus Other.

# Retail or hospitality checkout

- Outcome: “Did you find everything you needed?” Yes or No.

- Staff: “How would you rate staff helpfulness today?” 1–5.

- Open: “If something felt off, what was it?”

# Healthcare visit follow-up

- Communication: “I understood my options and next steps.” Strongly disagree to Strongly agree.

- Wait time: “Was your wait acceptable?” Yes or No, then an estimate in minutes.

- Open: “One thing we should improve for next time?”

Takeaway: specific context prompts specific feedback you can act on Monday morning.

# How long should your survey be?

Short enough that you would take it yourself. As a rule, five questions or fewer wins. On mobile, surveys that take under two minutes to complete beat longer forms by a wide margin. Again, Formbricks’ data on timing and reminders is practical: https://formbricks.com/blog/increase-survey-response-rate.
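One way to hold a draft to the two-minute target is to estimate completion time from its question types before launch. The per-type timings below are hypothetical placeholders, not measured values. Replace them with median times from your own pilot.

```python
# Hypothetical seconds per question type; swap in medians from your own pilot data.
SECONDS_PER_TYPE = {
    "binary": 4,
    "rating": 8,
    "likert": 8,
    "multiple_choice": 10,
    "open": 30,
}
BUDGET_SECONDS = 120  # the two-minute mobile target


def estimated_seconds(question_types: list[str]) -> int:
    """Sum the assumed time for each question in the draft."""
    return sum(SECONDS_PER_TYPE[kind] for kind in question_types)


def within_budget(question_types: list[str]) -> bool:
    """True if the draft's estimated time fits the two-minute budget."""
    return estimated_seconds(question_types) <= BUDGET_SECONDS


draft = ["rating", "open", "rating", "open"]
print(estimated_seconds(draft), within_budget(draft))  # 76 True
```

Under these assumed timings, open-text items dominate the estimate, which is one more reason to keep them optional and few.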

# Advanced moves for better data

If you already run surveys, this is where gains compound.

- Personalize invites by context. Reference the action they just took.

- Use channel fit. Email for depth, in-app for immediacy, SMS for ultra-short surveys.

- Add gentle attention checks, not trick questions.

- Monitor completion time and straight-lining (the same answer picked for every item in a grid). Trim where you see friction.

- Analyze open text with modern tooling to spot themes quickly. A good primer on turning feedback into action is here: https://www.sentisum.com/customer-feedback/actionable-customer-insights.
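Completion-time and straight-lining checks are easy to automate. This sketch treats anyone faster than a third of the median completion time as a possible speeder, and anyone giving the identical answer to every item in a grid of four or more as a possible straight-liner. Both thresholds and the `Response` record shape are assumptions to tune on your own data, and flagged responses are candidates for review rather than automatic deletions.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Response:
    respondent_id: str
    seconds: float           # time from start to submit
    grid_answers: list[int]  # answers to a block of items sharing one scale


def is_straight_liner(r: Response, min_items: int = 4) -> bool:
    """Same answer on every item of a grid with at least `min_items` items."""
    return len(r.grid_answers) >= min_items and len(set(r.grid_answers)) == 1


def flag_responses(responses: list[Response], speed_ratio: float = 1 / 3) -> dict[str, list[str]]:
    """Map respondent ids to the reasons their answers look low-effort."""
    cutoff = speed_ratio * median([r.seconds for r in responses])
    flagged: dict[str, list[str]] = {}
    for r in responses:
        reasons = []
        if r.seconds < cutoff:
            reasons.append("speeder")
        if is_straight_liner(r):
            reasons.append("straight-liner")
        if reasons:
            flagged[r.respondent_id] = reasons
    return flagged


sample = [
    Response("a", 90, [5, 4, 5, 3]),
    Response("b", 20, [4, 4, 4, 4]),
    Response("c", 100, [3, 3, 3, 3]),
    Response("d", 80, [2, 5, 4, 1]),
]
print(flag_responses(sample))
# {'b': ['speeder', 'straight-liner'], 'c': ['straight-liner']}
```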

If you want AI-powered summaries, root-cause grouping, and trend detection inside your feedback loop, explore Sleekplan Intelligence: https://sleekplan.com/intelligence/.

# Pitfalls to avoid with quick fixes

- Leading wording: “our excellent service” -> Neutral: “our service.”

- Double-barreled: “quality and service” -> Split into two questions.

- Fatigue from over-surveying -> Keep a central send calendar and space out requests.

- Unbalanced scales -> Provide equal positive and negative choices, include neutral.

- Logic bugs on mobile -> Test on real devices before sending broadly.
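The send-calendar fix for over-surveying amounts to one shared record that every team checks before sending. This sketch keeps that record in memory and assumes a 30-day quiet period per customer. Both the `SendCalendar` class and the window are illustrative; a real setup would likely persist the history somewhere all teams’ tools can read it.

```python
from datetime import datetime, timedelta

# Assumed quiet period; choose a window that fits your customers and channels.
QUIET_PERIOD = timedelta(days=30)


class SendCalendar:
    """One shared record of survey sends, so every team checks the same history."""

    def __init__(self, quiet_period: timedelta = QUIET_PERIOD):
        self.quiet_period = quiet_period
        self._last_sent: dict[str, datetime] = {}

    def can_send(self, customer_id: str, now: datetime) -> bool:
        """True if this customer has not been surveyed within the quiet period."""
        last = self._last_sent.get(customer_id)
        return last is None or now - last >= self.quiet_period

    def record_send(self, customer_id: str, now: datetime) -> bool:
        """Record a send if allowed; return False if it would over-survey."""
        if not self.can_send(customer_id, now):
            return False
        self._last_sent[customer_id] = now
        return True


cal = SendCalendar()
start = datetime(2024, 1, 1)
print(cal.record_send("cust-1", start))                       # True
print(cal.record_send("cust-1", start + timedelta(days=10)))  # False: inside quiet period
print(cal.record_send("cust-1", start + timedelta(days=30)))  # True
```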

Principle: if the data could mislead you, the question is broken.

# Measuring survey effectiveness

Beyond response rate, look for:

- Coverage: do responses represent your customer segments?

- Reliability: do related items move together logically?

- Actionability: can a team ship a change next sprint based on the result?

- Business link: do score shifts correlate with retention or revenue?
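Coverage, reliability, and the business link can each get a first quantitative check. This sketch compares each segment’s share of responses with its share of customers, flagging segments below half their expected share (an arbitrary tolerance), and uses a Pearson correlation to see whether two related items move together or whether scores track a retained/churned flag. The segment names and numbers are made up for illustration, and a correlation is a prompt for investigation, not proof that scores drive retention.

```python
from math import sqrt


def coverage_gaps(customers: dict[str, int], responses: dict[str, int],
                  tolerance: float = 0.5) -> dict[str, float]:
    """Segments whose response share falls below `tolerance` x their customer share.

    Values are representation ratios: 1.0 means perfectly proportional.
    """
    total_customers = sum(customers.values())
    total_responses = sum(responses.values())
    gaps = {}
    for segment, count in customers.items():
        customer_share = count / total_customers
        response_share = responses.get(segment, 0) / total_responses
        ratio = response_share / customer_share
        if ratio < tolerance:
            gaps[segment] = ratio
    return gaps


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation (statistics.correlation does the same on Python 3.10+).

    Raises ZeroDivisionError if either list is constant.
    """
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (spread_x * spread_y)


# Illustrative numbers only.
print(coverage_gaps({"smb": 600, "mid": 300, "enterprise": 100},
                    {"smb": 150, "mid": 45, "enterprise": 5}))
satisfaction = [6, 7, 3, 5, 2, 7]
ease = [5, 5, 2, 4, 1, 4]      # related item: should move with satisfaction
retained = [1, 1, 0, 1, 0, 1]  # 1 = still a customer a quarter later
print(round(pearson(satisfaction, ease), 2), round(pearson(satisfaction, retained), 2))
```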

When in doubt, run a short pilot, then adjust. For a broader framework on turning signal into action, review SentiSum’s playbook on actionable insights: https://www.sentisum.com/customer-feedback/actionable-customer-insights.

# Quick checklist

- Goal defined and tied to a decision.

- 3–5 questions, mobile-first, clear progress.

- Neutral wording, single idea per item.

- Balanced scales, plain labels.

- Skip logic for relevance, one open text at the end.

- Test on devices, pilot with a small segment, then ship.
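Parts of the checklist can be verified automatically before a survey ships. This sketch validates a flat list of questions (it ignores branching) against three items: 3–5 questions, exactly one open-text question placed last, and an odd number of scale points so rating and Likert items have a neutral midpoint. The `Question` shape and the type names are assumptions, and an odd point count is only a proxy for balance, so the labels still need a human read.

```python
from dataclasses import dataclass


@dataclass
class Question:
    text: str
    kind: str              # assumed kinds: "rating", "likert", "binary", "choice", "open"
    scale_points: int = 0  # 0 for questions without a scale


def check_survey(questions: list[Question]) -> list[str]:
    """Return checklist problems for a flat (non-branching) survey."""
    problems = []
    if not 3 <= len(questions) <= 5:
        problems.append(f"{len(questions)} questions; the checklist suggests 3-5")
    open_positions = [i for i, q in enumerate(questions) if q.kind == "open"]
    if open_positions != [len(questions) - 1]:
        problems.append("expected exactly one open-text question, placed last")
    for q in questions:
        # An even number of points leaves no neutral middle option.
        if q.kind in ("rating", "likert") and q.scale_points > 0 and q.scale_points % 2 == 0:
            problems.append(f"no neutral midpoint: {q.text!r}")
    return problems


good = [
    Question("Overall, how satisfied are you with your visit?", "rating", 7),
    Question("How easy was it to book?", "rating", 5),
    Question("Anything else we should know?", "open"),
]
print(check_survey(good))  # []
```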

# FAQ

Q: What is a good CSAT question? A: “How satisfied are you with [experience] today?” Use a 5- or 7-point scale with clear anchors.

Q: Where do I place open-ended questions? A: At the end, after commitment builds, with a focused prompt.

Q: 5-point or 7-point scale? A: Use 5 for speed, 7 for nuance. Be consistent over time.

Q: How often should we survey? A: Tie cadence to events, not the calendar. Avoid repeating asks to the same person within a short window. Coordinate across teams.

Q: What response rate is “good”? A: It depends on channel and audience. Track trend lines for your segments. Improve by tightening scope, timing, and relevance.
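Tracking trend lines per segment starts with a response rate per segment and period. This sketch takes one `(segment, period)` tuple per invitation and one per completed response; the segment could be a channel, plan, or region, and that record shape is an assumption.

```python
from collections import Counter, defaultdict
from typing import Iterable, Tuple


def response_rates(invites: Iterable[Tuple[str, str]],
                   responses: Iterable[Tuple[str, str]]) -> dict:
    """Return {segment: {period: responses / invitations}} for trend tracking.

    Responses with no matching invitations are ignored.
    """
    sent = Counter(invites)
    received = Counter(responses)
    rates: dict = defaultdict(dict)
    for (segment, period), n_sent in sorted(sent.items()):
        rates[segment][period] = received[(segment, period)] / n_sent
    return dict(rates)


invites = [("email", "2024-01")] * 10 + [("in_app", "2024-01")] * 4
responses = [("email", "2024-01")] * 2 + [("in_app", "2024-01")] * 2
print(response_rates(invites, responses))
# {'email': {'2024-01': 0.2}, 'in_app': {'2024-01': 0.5}}
```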

Final thought: quality questions respect people’s time and attention. When we do that, customers tell us what we need to hear, and we can act with confidence.