Artificial intelligence is no longer a sidecar. AI for product strategy now sits in the driver’s seat, guiding market intelligence, customer insight, and roadmap decisions with clarity. Teams that embed AI into planning report clear gains in productivity and decision quality, and research points to measurable improvements in output and speed. The point is not to replace judgment; it is to scale it.

# From reactive to predictive

Traditional strategy reacted to the past. AI shifts the posture to predictive and adaptive. Instead of quarterly autopsies, we get continuous signals (competitive moves, sentiment shifts, and usage patterns) that update the plan in near real time. Studies on the state of AI adoption point to faster, more confident decisions when model output is combined with human review, compared with decisions made on intuition alone. This aligns with the findings summarized in McKinsey’s perspective on AI adoption in product organizations.

Principle: let models surface patterns, let people set direction.

# Market intelligence, at the pace of change

Static competitive decks expire quickly. AI-driven competitive analysis tracks live changes across websites, pricing, launches, hiring, and patents, then flags strategic pivots early. For practical examples, see Glean’s overview of AI-enabled monitoring and the Miro guide to AI competitive analysis. Together, they show how to move from manual scanning to continuous signal processing.

What this looks like day to day:

- Monitor messaging updates and pricing shifts automatically, then roll them up into weekly alerts.

- Parse job postings for early hints of strategic bets, for example a cluster of ML roles around recommendations.

- Map whitespace: cross-reference competitor features with customer complaints to find unmet needs.

- Forecast likely moves from historical behavior patterns, and prepare countermoves before they land.
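To make the first bullet concrete, here is a minimal Python sketch of weekly change detection. It assumes you already scrape competitor pricing pages into a list of snapshots; the `Snapshot` fields, the `detect_changes` function, and the 5% price threshold are hypothetical choices for illustration, not part of any particular tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Snapshot:
    """One scraped observation of a competitor's plan (hypothetical schema)."""
    competitor: str
    plan: str
    price: float   # monthly list price
    headline: str  # main messaging line shown for the plan


def detect_changes(previous, current, min_price_delta=0.05):
    """Compare last week's snapshots with this week's and return alert strings.

    A price alert fires when the relative change is at least min_price_delta;
    any headline change produces a messaging alert. New and removed plans are
    reported too.
    """
    before = {(s.competitor, s.plan): s for s in previous}
    now_keys = {(s.competitor, s.plan) for s in current}
    alerts = []
    for snap in current:
        old = before.get((snap.competitor, snap.plan))
        if old is None:
            alerts.append(f"{snap.competitor}: new plan '{snap.plan}' at ${snap.price:.2f}")
            continue
        if old.price > 0:
            delta = (snap.price - old.price) / old.price
            if abs(delta) >= min_price_delta:
                alerts.append(
                    f"{snap.competitor}: '{snap.plan}' price "
                    f"{old.price:.2f} -> {snap.price:.2f} ({delta:+.0%})"
                )
        if snap.headline != old.headline:
            alerts.append(f"{snap.competitor}: '{snap.plan}' messaging now reads '{snap.headline}'")
    for key, old in before.items():
        if key not in now_keys:
            alerts.append(f"{old.competitor}: plan '{old.plan}' removed")
    return alerts


if __name__ == "__main__":
    last_week = [Snapshot("Acme", "Pro", 49.0, "Ship faster"),
                 Snapshot("Acme", "Starter", 9.0, "Free forever")]
    this_week = [Snapshot("Acme", "Pro", 59.0, "Ship faster with AI"),
                 Snapshot("Acme", "Team", 99.0, "Built for teams")]
    for alert in detect_changes(last_week, this_week):
        print(alert)
```

Running this once a week over stored snapshots gives the roll-up; small price moves below the threshold stay silent to keep the alert stream readable.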

Takeaway: treat competitive analysis as a live system, not a PDF.

# Customer insight, without the noise

You already have the answers; they are buried in tickets, reviews, and call notes. NLP turns that mess into themes, sentiment, and urgency. Zendesk’s guide to AI in customer feedback shows the mechanics, from intent detection to trend surfacing. The result is a single view of what hurts, what delights, and what should be fixed next.

Use cases that pay back fast:

- Cluster support tickets to spot emerging defects, so bug fixes ship within 7 days, not 7 weeks.

- Extract the top reasons for churn from call transcripts, then pair them with product usage data to find root causes.

- Quantify impact by segment: which issues block enterprise accounts versus SMBs.
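The last bullet, quantifying impact by segment, comes down to tagging each ticket with themes and counting per segment. The sketch below assumes tickets arrive as `(segment, text)` pairs and uses a hand-written keyword lexicon (`THEMES`) as a stand-in for a real NLP model; the themes, keywords, and function names are illustrative only.

```python
import re
from collections import Counter, defaultdict

# Hypothetical keyword lexicon. A production setup would more likely use an
# NLP intent classifier or embedding clusters; keywords keep the sketch small.
THEMES = {
    "export": {"export", "csv", "download"},
    "sso": {"sso", "saml", "login"},
    "performance": {"slow", "timeout", "lag"},
}


def tag_themes(text):
    """Return the set of themes whose keywords appear in a ticket's text."""
    words = set(re.findall(r"[a-z0-9]+", text.lower()))
    return {theme for theme, keywords in THEMES.items() if words & keywords}


def themes_by_segment(tickets):
    """Count tickets per theme within each customer segment.

    tickets: iterable of (segment, text) pairs. A ticket that matches several
    themes is counted once under each of them.
    """
    counts = defaultdict(Counter)
    for segment, text in tickets:
        for theme in tag_themes(text):
            counts[segment][theme] += 1
    return counts


if __name__ == "__main__":
    tickets = [
        ("enterprise", "SSO login fails after SAML update"),
        ("enterprise", "SAML login broken again"),
        ("smb", "CSV export is slow"),
        ("smb", "Dashboard lag on mobile"),
    ]
    for segment, counter in themes_by_segment(tickets).items():
        print(segment, counter.most_common())
```

Even this crude version separates an enterprise SSO problem from an SMB performance problem, which is the distinction the bullet asks for.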

If you want this built into your workflow, see how Sleekplan Intelligence turns raw feedback into prioritized insights for product and CX teams: https://sleekplan.com/intelligence/

# Segmentation that mirrors behavior

Firmographics are a start; behavior is the truth. AI-driven segmentation groups customers by how they use your product and the value they realize. Contentful’s summary of AI segmentation highlights why behavior-based cohorts predict adoption, expansion, and churn more accurately than static labels. Build playbooks around these cohorts, then measure lift.

Signals to model:

- Feature combinations used together, for example, export plus API tokens in the same session.

- Time-to-value milestones, such as first workspace created within 24 hours.

- Support intensity per cohort; high-ticket segments often mask design debt.
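As a starting point, the three signals above can be derived from a raw event log before any clustering happens. This sketch assumes a hypothetical `Event` record with an account, a session id, a feature name, and hours since signup, plus separate ticket and seat counts per account; the feature names `export`, `api_token`, and `workspace_created` are placeholders for whatever your analytics emits.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    """One product analytics event (hypothetical schema)."""
    account: str
    session: str
    feature: str              # e.g. "export", "api_token", "workspace_created"
    hours_since_signup: float


def behavior_signals(events, tickets_per_account, seats_per_account):
    """Compute per-account behavioral signals suitable as segmentation inputs."""
    session_features = defaultdict(set)   # (account, session) -> features used
    first_workspace = {}                   # account -> earliest workspace hour
    for e in events:
        session_features[(e.account, e.session)].add(e.feature)
        if e.feature == "workspace_created":
            prev = first_workspace.get(e.account)
            if prev is None or e.hours_since_signup < prev:
                first_workspace[e.account] = e.hours_since_signup

    co_used = defaultdict(bool)  # account -> export and API tokens in one session
    for (account, _), feats in session_features.items():
        co_used[account] |= {"export", "api_token"} <= feats

    signals = {}
    for account in sorted({acct for acct, _ in session_features}):
        ttv = first_workspace.get(account)
        seats = max(seats_per_account.get(account, 1), 1)  # avoid division by zero
        signals[account] = {
            "export_plus_api_same_session": co_used[account],
            "workspace_within_24h": ttv is not None and ttv <= 24,
            "tickets_per_seat": tickets_per_account.get(account, 0) / seats,
        }
    return signals
```

These per-account dictionaries can then feed a clustering step such as k-means, or simple rule-based cohorts, whose lift you measure against the playbooks.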

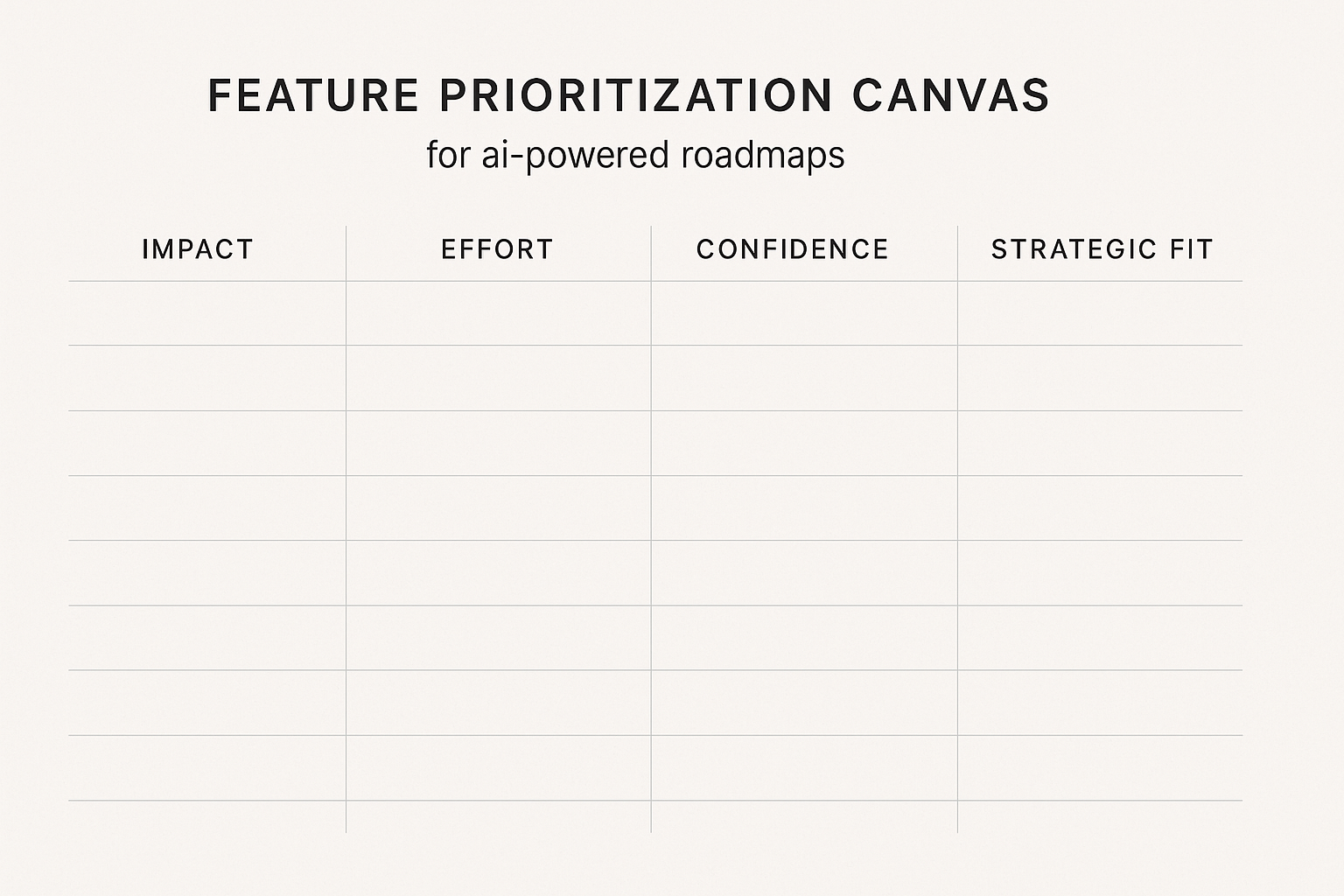

# Roadmap prioritization, without guesswork

Scoring frameworks are helpful; the estimates that feed them often are not. Train models on historical launches to predict adoption, effort, and risk. Use them to rank backlog items, then let human judgment adjust for context. When the market moves, refresh the stack rank automatically.

Practical workflow:

- Predict impact: match ideas to past launches with similar patterns to estimate likely usage.

- Estimate effort from real delivery data, not gut feel, and factor in dependency graphs.

- Recalculate weekly as new feedback, usage, or competitive signals arrive.

- Sequence intentionally: build learning features first to de-risk larger bets.
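The first two steps can be prototyped with very little machinery. The sketch below uses k-nearest neighbors for impact (mean adoption of the most similar past launches) and a median of historical delivery times for effort, then ranks by predicted adoption per week of effort. The feature vectors, size classes, and scoring formula are assumptions for illustration; in practice you would scale features and sanity-check the neighbors before trusting the rank.

```python
import math
from statistics import mean, median


def predict_adoption(idea_vector, past_launches, k=3):
    """k-nearest-neighbor estimate: mean adoption of the k most similar launches.

    past_launches: list of (feature_vector, observed_adoption_rate) pairs.
    Features should be on comparable scales, since Euclidean distance is used.
    """
    nearest = sorted(past_launches, key=lambda launch: math.dist(idea_vector, launch[0]))[:k]
    return mean(adoption for _, adoption in nearest)


def estimate_effort(size_class, delivery_history):
    """Median delivered weeks for past items with the same size class."""
    weeks = [w for cls, w in delivery_history if cls == size_class]
    if not weeks:
        raise ValueError(f"no delivery history for size class {size_class!r}")
    return median(weeks)


def rank_backlog(ideas, past_launches, delivery_history, k=3):
    """Return (name, score) pairs sorted by predicted adoption per effort-week."""
    scored = []
    for name, vector, size_class in ideas:
        impact = predict_adoption(vector, past_launches, k)
        effort = estimate_effort(size_class, delivery_history)
        scored.append((name, impact / effort))
    return sorted(scored, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical features: (touches core workflow, enterprise-requested, request count / 10)
    past = [((1.0, 1.0, 0.5), 0.40), ((1.0, 0.0, 0.1), 0.05),
            ((0.0, 1.0, 0.4), 0.30), ((0.0, 0.0, 0.0), 0.02)]
    history = [("S", 1), ("S", 2), ("S", 3), ("M", 4), ("M", 6), ("L", 10)]
    ideas = [("bulk edit", (1.0, 1.0, 0.4), "M"), ("dark mode", (0.0, 0.0, 0.1), "S")]
    for name, score in rank_backlog(ideas, past, history):
        print(f"{name}: {score:.3f}")
```

Rerunning `rank_backlog` whenever new launches or delivery data arrive is one way to implement the weekly recalculation; the ranked list is an input to the conversation, not the final call.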

Takeaway: use AI to narrow the field, use judgment to make the final trade.

# Journey mapping that reflects reality

Most journey maps age on a wall. Connect product analytics, CRM, and support data, then let AI reconstruct real paths: where users stall, which channels convert, which motions drive repeat use. Miro’s guide to AI for journey mapping and WSI’s primer on modern journeys outline the approach. Your job is to turn friction points into backlog items and measure the change.

Focus areas:

- Identify the drop-off after trial day 3, then fix the activation step that causes it.

- Locate moments of reconsideration, and add in-product nudges or docs precisely there.

- Track cohort-level movement weekly to verify that fixes change behavior.
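Finding the drop-off is mostly set arithmetic once events are joined by user. This sketch assumes a hypothetical event log of `(user_id, step)` pairs and an ordered list of funnel steps; the step names are placeholders, and it treats a user as having reached a step if they ever emitted that event, ignoring ordering and timing.

```python
from collections import defaultdict


def step_conversion(events, steps):
    """For each consecutive pair of steps, the share of users who reached the
    first step and also reached the next one.

    events: iterable of (user_id, step_name) pairs.
    steps: ordered list of funnel step names.
    """
    users_at = defaultdict(set)
    for user, step in events:
        users_at[step].add(user)
    rates = []
    for current, following in zip(steps, steps[1:]):
        reached = users_at[current]
        advanced = reached & users_at[following]
        rate = len(advanced) / len(reached) if reached else 0.0
        rates.append((current, following, rate))
    return rates


def biggest_drop(events, steps):
    """The transition with the lowest conversion: the first candidate to fix."""
    return min(step_conversion(events, steps), key=lambda transition: transition[2])


if __name__ == "__main__":
    steps = ["signup", "trial_day_1", "trial_day_3", "first_report"]
    events = [(u, "signup") for u in ("u1", "u2", "u3", "u4")]
    events += [(u, "trial_day_1") for u in ("u1", "u2", "u3", "u4")]
    events += [(u, "trial_day_3") for u in ("u1", "u2", "u3")]
    events += [("u1", "first_report")]
    for current, following, rate in step_conversion(events, steps):
        print(f"{current} -> {following}: {rate:.0%}")
    print("biggest drop:", biggest_drop(events, steps))
```

Running the same function on each weekly signup cohort and comparing the rates is a simple way to check whether a fix actually moved behavior.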

# Implementation playbook: tools, data, people

Tools: start focused, expand later. Early on, a portfolio of specialized systems usually beats a monolith. Product Marketing Alliance details a pragmatic rollout sequence that aligns to value. Integrations matter more than logos, so design the data flow first.

Data: invest in quality and governance early. RSM’s overview of AI governance explains why clean pipelines and clear ownership prevent silent drift. Document sources, refresh rates, and definitions. Bad data compounds.
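One lightweight way to document sources, refresh rates, and definitions is a small registry that can also flag stale inputs. The `DataSource` fields, the six-hour grace period, and the example sources below are assumptions for illustration, not a standard schema.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class DataSource:
    """One documented input to strategy models (illustrative fields)."""
    name: str
    owner: str                 # accountable team or person
    definition: str            # what a row or metric means
    refresh_every: timedelta   # expected refresh cadence
    last_refreshed: datetime   # timezone-aware timestamp of the last load


def stale_sources(registry, now=None, grace=timedelta(hours=6)):
    """Names of sources whose last refresh is older than cadence plus grace."""
    now = now or datetime.now(timezone.utc)
    return [s.name for s in registry if now - s.last_refreshed > s.refresh_every + grace]


if __name__ == "__main__":
    now = datetime(2024, 5, 10, 12, tzinfo=timezone.utc)
    registry = [
        DataSource("support_tickets", "CX ops", "One row per closed ticket",
                   timedelta(days=1), datetime(2024, 5, 10, 0, tzinfo=timezone.utc)),
        DataSource("usage_events", "Data eng", "One row per product event",
                   timedelta(hours=1), datetime(2024, 5, 9, 0, tzinfo=timezone.utc)),
    ]
    print(stale_sources(registry, now))  # ['usage_events']
```

Keeping the owner and definition next to the freshness check means a stale alert already names who to ask and what the data was supposed to mean.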

People: raise data literacy and model intuition across PM, design, and engineering. Product School’s resources for AI-savvy PMs are a good baseline. Create review rituals where models explain themselves, and where humans can veto.

# Go-to-market with predictive confidence

AI helps pick launch windows, pressure-test positioning, and select channels. Use predictive models to score launch readiness and success probability, then adjust plan and timing. The Gibion and Pedowitz walkthroughs show how teams forecast adoption and tune messaging before spending budget.

Tactics that work:

- Pre-launch signal review: look for early upticks in intent and for competitive noise.

- Message testing by cohort: keep the winners, cut the rest.

- Budget allocation that follows response, not internal commitments.
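Keeping the winners and cutting the rest needs a rule for when a difference is real rather than noise. A common choice is a two-proportion z-test on conversion rates within each cohort; the sketch below implements it with the standard library only. The 0.05 significance level and the three decision labels are assumptions, and checking results repeatedly mid-test inflates false positives, so fix the sample size up front or use a sequential method.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates.

    Returns (z, p_value); z > 0 means variant B converts better than A.
    Uses the pooled proportion for the standard error under the null.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:  # all-or-nothing outcomes in both arms: no evidence either way
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


def decide(control, variant, alpha=0.05):
    """control, variant: (conversions, impressions) for one cohort."""
    z, p_value = two_proportion_z_test(*control, *variant)
    if p_value >= alpha:
        return "keep testing"
    return "keep variant" if z > 0 else "keep control"


if __name__ == "__main__":
    print(decide((100, 2000), (150, 2000)))  # 5.0% vs 7.5%
    print(decide((100, 2000), (105, 2000)))  # 5.0% vs 5.25%
```

Run per cohort, this keeps a message that clearly wins in one segment from being averaged away by another.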

# Measure what moves the business

Tie strategy to outcomes using leading and lagging indicators. Multimodal’s KPI guide and Statsig’s perspective on AI product KPIs lay out sensible sets.

Keep a balanced scorecard:

- Revenue and pipeline lift: attribution models that connect features to dollars.

- Satisfaction and retention: continuous sentiment tracking plus churn prediction.

- Market position: share and differentiation signals from competitive tracking.

- Adoption and depth of use: feature-level engagement, plus unexpected usage that hints at new value.
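Adoption and depth are easy to conflate, so it helps to pin down definitions before putting them on a scorecard. The sketch assumes adoption means distinct users of a feature divided by active users in the period, and depth means events per adopting user; both definitions and the `(user_id, feature)` event shape are illustrative choices, not a standard.

```python
from collections import Counter, defaultdict


def feature_engagement(usage, active_users):
    """Adoption rate and depth per feature for one period.

    usage: iterable of (user_id, feature) events.
    active_users: number of users active in the same period.
    adoption = distinct users of the feature / active users
    depth    = events for the feature / distinct users of the feature
    """
    if active_users <= 0:
        raise ValueError("active_users must be positive")
    events = Counter()
    adopters = defaultdict(set)
    for user, feature in usage:
        events[feature] += 1
        adopters[feature].add(user)
    return {
        feature: {
            "adoption": len(adopters[feature]) / active_users,
            "depth": events[feature] / len(adopters[feature]),
        }
        for feature in events
    }


if __name__ == "__main__":
    usage = [("u1", "export"), ("u1", "export"), ("u2", "export"),
             ("u3", "api"), ("u3", "api"), ("u3", "api")]
    for feature, metrics in feature_engagement(usage, active_users=4).items():
        print(feature, metrics)
```

In this toy data, `api` has low adoption but high depth; that combination is one signal worth investigating as possible unexpected value.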

# Guardrails, by design

Bias, opacity, and overfitting are real risks. Follow the NIST AI Risk Management Framework to structure reviews. Favor interpretable models when the decision carries weight. Keep a human in the loop for moves that affect pricing, privacy, or policy.

Practical safeguards:

- Segment-level performance checks: make sure models do not underserve key groups.

- Decision logs: record when you accept or override model advice, and why.

- Quarterly red-teaming: test your models for drift and brittle assumptions.
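For the drift part of red-teaming, one widely used check is the Population Stability Index (PSI), which compares the distribution of a model input or score in a recent window against a baseline. This sketch uses equal-width bins from the baseline range for simplicity (quantile bins are also common) and a small epsilon to avoid taking the log of zero. A frequently quoted rule of thumb reads PSI below 0.1 as stable and above 0.25 as a significant shift; treat those cutoffs as conventions to calibrate, not guarantees.

```python
import math


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI between a baseline sample and a recent sample of one input or score.

    PSI = sum over bins of (a_i - e_i) * ln(a_i / e_i), where e_i and a_i are
    the shares of baseline and recent values that fall in bin i.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values into edge bins
        return [max(c / len(values), eps) for c in counts]  # eps keeps log() finite

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


if __name__ == "__main__":
    baseline = [i / 100 for i in range(100)]
    recent = [0.5 + i / 200 for i in range(100)]  # same feature, shifted upward
    print(round(population_stability_index(baseline, baseline), 6))
    print(round(population_stability_index(baseline, recent), 3))
```

Logging the PSI per input next to the decision log makes it easier to explain later why a model's advice was trusted or overridden.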

# A simple path to start

Weeks 1 to 2: pick one high-impact use case, for example feedback synthesis for prioritization. Wire in the data and define success metrics.

Weeks 3 to 6: operationalize the loop with a weekly refresh and review, and ship one decision based on AI insight each week.

Quarter 2: add competitive signals and journey friction analysis, and connect them to roadmap updates automatically.

# Quick answers

- What is AI for product strategy? Using machine learning and NLP to turn market, customer, and product data into clear decisions on what to build and when.

- Where should I start? Feedback synthesis and roadmap ranking usually return value fastest.

- How do I avoid black-box risk? Prefer explainable models, document decisions, and keep humans in control for critical calls.

Quiet rule: quality over speed, always. The craft is in choosing what not to build, then measuring what you did build with care. AI gives us sharper instruments; judgment decides how to use them.